Are your Custom Copilots ethical by design? Here’s how Australia’s AI Principles and Guardrails shape the future of AI solutions

With the rise of AI-powered copilots across industries, there’s a growing tension between innovation and accountability. In Australia, we’re not waiting for regulation to tell us what not to do—we’re setting the bar for what we should be doing with two powerful frameworks: Australia’s AI Ethics Principles and the Voluntary AI Safety Guardrails.

And if you’re building Custom Copilots (like I often do with Microsoft’s AI stack), these aren’t just nice-to-haves. They should shape how you design, build, test, and deploy.

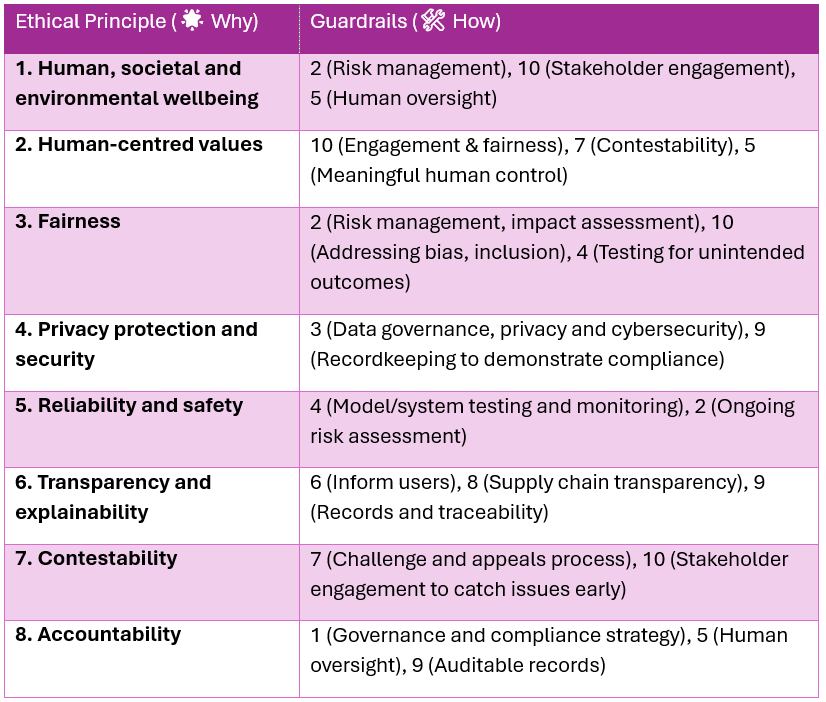

The Ethical Principles describe what we aim to achieve — they’re the values or goals. The 10 Guardrails describe how to achieve them — they’re the actions or processes.

Think of it like this. If building responsible AI were like constructing a house:

- The Ethical Principles are your architectural values (e.g. sustainability, safety, community focus).

- The 10 Guardrails are your building codes and inspections — the concrete things you do (like wiring checks and fire exits) to ensure the home lives up to those values.

🔍 Breaking Down Australia’s AI Ethics Principles

Understanding the why behind responsible AI (and how each principle applies to real-world solutions such as Custom Copilot development)

1. Human, Societal and Environmental Wellbeing

AI should do more than just optimise a business process—it should benefit people and the planet.

For Custom Copilots, this could mean:

- Designing solutions that reduce repetitive work to improve employee wellbeing.

- Being mindful of how automation may impact employment or workload redistribution.

- Evaluating whether your AI use supports or undermines environmental sustainability goals.

🛠 Example: A Copilot that drafts documentation could include logic to highlight sustainable alternatives or reduce redundant outputs to save storage and energy.

2. Human-Centred Values

Respect for human rights, autonomy, and dignity should be built into your AI from the start.

This means:

- Users should always retain control over AI decisions.

- AI should support—not override—user agency.

- Systems must respect cultural diversity, including Indigenous values and knowledge systems.

🛠 Example: A Copilot recommending employee bonuses or job candidates must be reviewed by a human decision-maker, and designed with bias mitigation and fairness in mind.

3. Fairness

AI must be inclusive, accessible, and free from unjust discrimination.

That involves:

- Reviewing training data for bias or exclusion of vulnerable groups.

- Ensuring solutions work for people with disabilities, people with low digital literacy, and speakers of other languages.

- Avoiding the reproduction of historic inequalities through automation.

🛠 Example: If a Copilot helps prioritise service requests, it must not unintentionally disadvantage certain demographics based on postcode, surname, or device type.
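One way to put this into practice is to compare how often the Copilot marks requests as high priority across groups. The Python sketch below is a minimal, hypothetical example: the field names (`postcode_band`, `priority`) and the 0.8 threshold are assumptions, the threshold being borrowed from the "four-fifths" rule of thumb used in US employment testing rather than from the Australian Principles. A flagged gap is a prompt for human investigation, not proof of unfair treatment.

```python
from collections import defaultdict


def priority_rates(requests, group_key):
    """Return the share of requests marked high priority, per group."""
    totals = defaultdict(int)
    high = defaultdict(int)
    for req in requests:
        group = req[group_key]
        totals[group] += 1
        if req["priority"] == "high":
            high[group] += 1
    return {group: high[group] / totals[group] for group in totals}


def disparity_flags(rates, threshold=0.8):
    """Return groups whose high-priority rate is below `threshold` times the
    highest group's rate (a four-fifths-style heuristic)."""
    best = max(rates.values(), default=0)
    if best == 0:
        return []
    return sorted(group for group, rate in rates.items() if rate / best < threshold)


# Hypothetical sample of Copilot-prioritised service requests.
sample = [
    {"postcode_band": "metro", "priority": "high"},
    {"postcode_band": "metro", "priority": "high"},
    {"postcode_band": "metro", "priority": "low"},
    {"postcode_band": "regional", "priority": "high"},
    {"postcode_band": "regional", "priority": "low"},
    {"postcode_band": "regional", "priority": "low"},
]
rates = priority_rates(sample, "postcode_band")
print(disparity_flags(rates))  # ['regional']
```

Running the same check across other attributes you are worried about (surname origin, device type) follows the same pattern with a different `group_key`.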

4. Privacy Protection and Security

Trustworthy AI protects the most precious digital asset—data.

This means:

- Clear data governance practices for what’s collected, stored, and shared.

- Strong cybersecurity measures to prevent data breaches or malicious use.

- Transparent choices about how personal or sensitive data is used to train AI.

🛠 Example: A Custom Copilot accessing customer data must adhere to least privilege access, use secure APIs, and log all interactions for auditability.
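As a rough illustration of least privilege plus audit logging, the sketch below gates each tool call a Copilot makes on an explicitly granted scope and records every attempt, allowed or not. The scope names, `call_tool`, and the in-memory `audit_log` are hypothetical; on a Microsoft stack you would more likely rely on platform identity and logging services (for example, Microsoft Entra ID permissions), but the pattern is the same.

```python
from datetime import datetime, timezone

# Hypothetical scopes granted to this Copilot's identity: read-only customer access.
GRANTED_SCOPES = {"customers.read"}

# In production this would be an append-only, tamper-evident store, not a list.
audit_log = []


class ScopeError(PermissionError):
    """Raised when a tool call needs a scope the Copilot was not granted."""


def call_tool(user_id, tool_name, required_scope, payload, handler):
    """Run `handler` only if the Copilot holds `required_scope`, logging every attempt."""
    allowed = required_scope in GRANTED_SCOPES
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "tool": tool_name,
        "scope": required_scope,
        "allowed": allowed,
        "fields": sorted(payload),  # log field names, not customer values
    })
    if not allowed:
        raise ScopeError(f"{tool_name} requires scope {required_scope}")
    return handler(payload)


def lookup_customer(payload):
    # Stand-in for a call to the customer system over a secure API.
    return {"customer_id": payload["customer_id"], "tier": "gold"}


print(call_tool("u123", "get_customer", "customers.read", {"customer_id": "C42"}, lookup_customer))
try:
    call_tool("u123", "update_customer", "customers.write", {"customer_id": "C42"}, lookup_customer)
except ScopeError as err:
    print("Blocked:", err)
```

Note that the log records field names rather than customer values, so the audit trail itself does not become another copy of sensitive data.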

5. Reliability and Safety

AI should function as intended, with minimal risk of harm.

This requires:

- Rigorous testing before deployment, and ongoing testing after it.

- Accounting for edge cases and failure modes.

- Ensuring AI behaviour stays within its intended scope—no surprises.

🛠 Example: If a Copilot suggests policy wording, it must be consistently reviewed to prevent misleading, outdated, or risky advice from being published.

6. Transparency and Explainability

People should be able to understand when and how AI is influencing outcomes.

This involves:

- Notifying users when AI is involved in decision-making.

- Explaining what data or logic influenced the result.

- Avoiding the “black box” effect—especially for high-impact decisions.

🛠 Example: A Copilot that proposes actions in a CRM should clearly show the source of its recommendation, e.g. “Based on client sentiment and activity in the last 30 days.”
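A simple way to avoid bare, unexplained suggestions is to make the supporting signals a required part of every recommendation object. This is a minimal sketch under assumed names (`Recommendation`, `signals`, `window_days`); how the signals are actually derived depends on your data and model.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str
    signals: list = field(default_factory=list)  # data the suggestion was based on
    window_days: int = 30

    def __post_init__(self):
        # Refuse to create a recommendation that cannot explain itself.
        if not self.signals:
            raise ValueError("A recommendation must record the signals behind it")

    def explain(self):
        """Build a plain-language source line to show next to the suggestion."""
        if len(self.signals) == 1:
            basis = self.signals[0]
        else:
            basis = ", ".join(self.signals[:-1]) + " and " + self.signals[-1]
        return f"Based on {basis} in the last {self.window_days} days."


rec = Recommendation("Schedule a check-in call", ["client sentiment", "activity"])
print(f"{rec.action}: {rec.explain()}")
```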

7. Contestability

People should be able to challenge or appeal outcomes that affect them.

This means:

- Having clear channels for users to request a review or correction of AI-influenced decisions.

- Ensuring decisions can be reversed or re-examined by a human.

- Logging decisions and rationale for auditing.

🛠 Example: If a Copilot denies a service upgrade, the user should have a way to challenge that through a built-in workflow or feedback channel.

8. Accountability

Someone must always be responsible for AI outcomes—no passing the buck to “the algorithm.”

This involves:

- Assigning clear ownership of AI use cases.

- Documenting decisions made during development.

- Enabling human oversight and intervention throughout the AI lifecycle.

🛠 Example: Every Copilot deployment should include documentation of its purpose, intended outcomes, and responsible parties—from development to support.

The Guardrails: From ethics to execution

The 10 Voluntary AI Safety Guardrails turn those ethical principles into practical steps. And for those of us delivering custom solutions using AI capabilities like Azure OpenAI, they’re gold.

🔟 The 10 AI Guardrails Explained

From foundations to fairness: What responsible AI looks like in practice

1. Accountability Processes

🧩 Establish governance, capability, and compliance strategies.

To responsibly use AI, your organisation must:

- Appoint an AI lead or owner responsible for oversight.

- Develop an AI strategy that aligns with your mission and risk appetite.

- Provide staff training on ethical AI, data governance, and relevant regulatory obligations.

📌 Tip: Document a governance model that outlines who approves, monitors, and reviews AI use—and how issues get escalated.

2. Risk Management

⚖️ Continuously identify, assess, and mitigate potential harms.

Establish and implement a risk management process to identify and mitigate risks.

Implement a risk framework that:

- Considers risks across social, ethical, operational, and technical domains.

- Includes ongoing impact assessments, not just a one-time review.

- Uses input from stakeholder engagement (see guardrail 10) to uncover blind spots.

📌 Tip: Use a risk register specifically for AI, including prompts and model updates, and review it regularly.
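A risk register does not need to be elaborate to be useful. The sketch below assumes a common 5 × 5 likelihood-by-impact scoring scheme (not something the Guardrails prescribe) and flags entries that are overdue for review, with high-scoring risks on a shorter cycle. The field names, review intervals, and sample risks are all illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Risk:
    risk_id: str
    description: str
    trigger: str          # what introduced the risk, e.g. "prompt change", "model update"
    likelihood: int       # 1 (rare) to 5 (almost certain)
    impact: int           # 1 (minor) to 5 (severe)
    owner: str
    last_reviewed: date

    @property
    def score(self):
        return self.likelihood * self.impact


def due_for_review(register, today, max_age_days=90, high_score=15, high_max_age_days=30):
    """Return IDs of risks overdue for review; high-scoring risks use the shorter interval."""
    overdue = []
    for risk in register:
        limit = high_max_age_days if risk.score >= high_score else max_age_days
        if today - risk.last_reviewed > timedelta(days=limit):
            overdue.append(risk.risk_id)
    return overdue


register = [
    Risk("R1", "System prompt edit weakens refusal of out-of-scope requests",
         "prompt change", likelihood=3, impact=5, owner="AI lead",
         last_reviewed=date(2024, 1, 10)),
    Risk("R2", "New model version changes the tone of customer summaries",
         "model update", likelihood=2, impact=2, owner="Product owner",
         last_reviewed=date(2024, 1, 10)),
]
print(due_for_review(register, today=date(2024, 3, 1)))  # ['R1']
```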

3. Data Governance & Protection

🔐 Manage the quality, origin, and security of data used in AI systems.

Protect AI systems, and implement data governance measures to manage data quality and provenance.

You must ensure:

- Data quality: Clean, complete, and fit for purpose.

- Data provenance: You know where it came from, how it was obtained, and that it’s lawful to use.

- Cybersecurity: Protect models from tampering, prompt injection, or data leakage.

📌 Tip: For generative AI or copilots, audit input and output data to prevent exposure of sensitive information.
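As a starting point for auditing inputs and outputs, the sketch below scans text for two obvious kinds of personal information (email addresses and Australian mobile numbers), redacts them, and returns what it found so the event can be logged. The patterns are deliberately simple assumptions; they will miss many forms of sensitive data, and a production Copilot would typically use a dedicated PII-detection or content-safety service. The same function can be applied to the user’s prompt and to the model’s response.

```python
import re

# Deliberately simple, hypothetical patterns; they will not catch every format.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "au_mobile": re.compile(r"\b04\d{2}\s?\d{3}\s?\d{3}\b"),
}


def audit_text(text):
    """Redact matches and return (redacted_text, list of finding types) for logging."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED {label.upper()}]", text)
        findings.extend([label] * count)
    return text, findings


redacted, findings = audit_text("Contact jane.doe@example.com or 0412 345 678.")
print(redacted)   # Contact [REDACTED EMAIL] or [REDACTED AU_MOBILE].
print(findings)   # ['email', 'au_mobile']
```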

4. Testing and Monitoring

🧪 Test before deployment and monitor continuously.

Test AI models and systems to evaluate model performance and monitor the system once deployed.

Before rollout:

- Validate models against pre-defined acceptance criteria.

- Simulate edge cases and adverse scenarios.

After launch:

- Monitor for drift, degradation, or unintended outcomes.

- Include logging and alerting for anomalous behaviour.

📌 Tip: Set model health KPIs (accuracy, bias, latency) and track them like you would with traditional systems.
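Tracking model health KPIs can start as a plain threshold check run each reporting period. In this hypothetical sketch the KPI names, thresholds, and weekly figures are placeholders; you would set real values as part of your pre-deployment acceptance criteria and route alerts to whoever owns the system.

```python
# Hypothetical thresholds, agreed as acceptance criteria before rollout.
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "bias_gap": ("max", 0.05),        # e.g. accuracy difference between user groups
    "p95_latency_ms": ("max", 2000),
}


def check_health(metrics):
    """Return alert messages for KPIs that are missing or outside their thresholds."""
    alerts = []
    for kpi, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(kpi)
        if value is None:
            alerts.append(f"{kpi}: no data reported")
        elif kind == "min" and value < limit:
            alerts.append(f"{kpi}: {value} below minimum {limit}")
        elif kind == "max" and value > limit:
            alerts.append(f"{kpi}: {value} above maximum {limit}")
    return alerts


weekly = {"accuracy": 0.87, "bias_gap": 0.03, "p95_latency_ms": 2400}
for alert in check_health(weekly):
    print("ALERT", alert)
```

Treating a missing metric as an alert is a deliberate choice: silence from a monitoring job is itself a sign that something may have drifted.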

5. Human Oversight

🧍 Ensure people can understand, guide, and override AI decisions.

AI systems must allow:

- Human intervention at critical points in the lifecycle.

- Oversight mechanisms that are context-aware and role-based.

- Controls for users to review and correct AI-generated content or outputs.

📌 Tip: For copilots, include an “edit before send” step before any communication or action is taken based on AI.
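One way to enforce an “edit before send” step is to make sending impossible until a named person has approved the draft. The `Draft` class and `send` function below are a hypothetical sketch, with a list standing in for the real email or messaging integration.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    recipient: str
    body: str
    ai_generated: bool = True
    approved_by: str = ""  # name of the human reviewer, empty until approved

    def approve(self, reviewer, edited_body=None):
        """Record who reviewed the draft, optionally replacing the AI text with their edit."""
        if edited_body is not None:
            self.body = edited_body
        self.approved_by = reviewer


def send(draft, transport):
    """Send via `transport`, but only once an AI-generated draft has a named approver."""
    if draft.ai_generated and not draft.approved_by:
        raise PermissionError("AI-drafted message must be reviewed before sending")
    transport(draft.recipient, draft.body)


outbox = []


def outbox_transport(recipient, body):
    outbox.append((recipient, body))  # stand-in for a real email or chat integration


draft = Draft("client@example.com", "Hi Sam, your renewal is due next week.")
try:
    send(draft, outbox_transport)
except PermissionError as err:
    print("Blocked:", err)

draft.approve("j.smith", edited_body="Hi Sam, just a reminder that your renewal is due next Friday.")
send(draft, outbox_transport)
print(outbox)
```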

6. User Awareness

💬 Disclose when AI is used and how.

Inform end-users regarding AI-enabled decisions, interactions with AI and AI-generated content.

Transparency builds trust. You must:

- Clearly notify users when they’re interacting with AI.

- Explain the role of AI in decision-making or content creation.

- Use context-appropriate methods (e.g. labels, tooltips, onboarding guides).

📌 Tip: Say “This message was drafted by AI—please review before sending” or “You’re now chatting with an AI assistant.”
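Disclosure labels are easiest to keep consistent when they are applied in one place rather than by each feature. This minimal sketch assumes each message carries a `source` and a `context`, and prefixes AI-generated content with a label chosen for that context; the label wording adapts the examples above and is illustrative only.

```python
from dataclasses import dataclass

# Illustrative label text per context; adapt the wording to your own UI guidelines.
DISCLOSURES = {
    "chat_start": "You're now chatting with an AI assistant.",
    "draft": "This message was drafted by AI. Please review before sending.",
}


@dataclass
class Message:
    text: str
    source: str            # "ai" or "human"
    context: str = "draft"


def render(message):
    """Prefix AI-generated messages with a disclosure label suited to the context."""
    if message.source != "ai":
        return message.text
    label = DISCLOSURES.get(message.context, "This content was generated with AI.")
    return f"[{label}]\n{message.text}"


print(render(Message("Here is a summary of the contract changes.", source="ai")))
```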

7. Contestability

📢 Allow users and stakeholders to challenge AI outputs.

Establish processes for people impacted by AI systems to challenge use or outcomes.

You need clear processes that let people:

- Challenge or appeal AI-based outcomes.

- Escalate concerns to a human for review.

- Understand why a decision was made (link to guardrail 6).

📌 Tip: Provide a “Request human review” button and document how challenges are handled and resolved.
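Documenting how challenges are handled is easier when the review process has explicit states and every step is recorded. The sketch below models a hypothetical review request with a small set of allowed transitions and a timestamped history; the status names and identifiers are assumptions, not part of the Guardrails.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical lifecycle for a "Request human review" ticket.
TRANSITIONS = {
    "submitted": {"in_review"},
    "in_review": {"upheld", "overturned"},
    "upheld": set(),       # original AI-influenced decision stands
    "overturned": set(),   # decision reversed by a human
}


@dataclass
class ReviewRequest:
    decision_id: str
    requester: str
    reason: str
    status: str = "submitted"
    history: list = field(default_factory=list)

    def move_to(self, new_status, actor, note=""):
        """Change status only along allowed transitions, recording who did it and why."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"Cannot move from {self.status} to {new_status}")
        self.history.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "from": self.status,
            "to": new_status,
            "actor": actor,
            "note": note,
        })
        self.status = new_status


req = ReviewRequest("upgrade-0042", "customer-17", "I believe I meet the upgrade criteria")
req.move_to("in_review", actor="service.manager")
req.move_to("overturned", actor="service.manager", note="Eligibility data was out of date")
print(req.status, [(h["from"], h["to"]) for h in req.history])
```

The history doubles as the audit record of how each challenge was resolved.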

8. Supply Chain Transparency

🔗 Collaborate across the AI lifecycle—including third-party providers.

You must:

- Disclose the source of your AI systems (e.g. Microsoft Azure OpenAI, third-party APIs).

- Share details on data and model use with partners or downstream users.

- Understand what’s in the black box—especially if you didn’t build it.

📌 Tip: Include transparency clauses in vendor contracts to mandate disclosure of training data and model limitations.
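To understand what is in the black box, it helps to keep a simple inventory of every AI component in a solution and check it for missing disclosures. The required fields, component names, and placeholder values below are hypothetical examples of what such an inventory might capture.

```python
from dataclasses import dataclass

# Hypothetical minimum disclosures expected for each AI component.
REQUIRED_FIELDS = ("provider", "model", "version", "data_sources", "known_limitations")


@dataclass
class Component:
    name: str
    details: dict


def missing_disclosures(components):
    """Map each component name to the required fields it has not filled in."""
    gaps = {}
    for component in components:
        missing = [f for f in REQUIRED_FIELDS if not component.details.get(f)]
        if missing:
            gaps[component.name] = missing
    return gaps


inventory = [
    Component("drafting-model", {
        "provider": "Microsoft Azure OpenAI",
        "model": "example-chat-model",      # placeholder deployment name
        "version": "example-version",       # placeholder
        "data_sources": ["internal policy library (retrieval)"],
        "known_limitations": ["can produce plausible but incorrect text"],
    }),
    Component("sentiment-api", {"provider": "Third-party vendor"}),
]
print(missing_disclosures(inventory))
```

Any gaps it reports are natural items to raise with the vendor under the contract clauses mentioned above.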

9. Recordkeeping

📝 Maintain documentation for compliance and accountability.

Keep and maintain records to allow third parties to assess compliance with guardrails.

Keep records of:

- AI system inventories and use cases.

- Decisions made during AI lifecycle design and risk assessment.

- Testing results, changes, and incident logs.

📌 Tip: Use a centralised AI register that links use cases to risk ratings, oversight owners, and documentation history.
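A centralised register can start as one small structured record per use case that can be exported for an auditor or other third party. The sketch below is one possible shape, with hypothetical field names, a made-up example entry, and a JSON export; many organisations would keep the same information in a database or governance tool instead.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class UseCase:
    use_case_id: str
    purpose: str
    risk_rating: str                                  # e.g. "low", "medium", "high"
    oversight_owner: str
    documents: list = field(default_factory=list)     # links to assessments, test reports
    history: list = field(default_factory=list)       # dated change-log entries


class AIRegister:
    def __init__(self):
        self._entries = {}

    def add(self, use_case):
        self._entries[use_case.use_case_id] = use_case

    def log_change(self, use_case_id, date_iso, summary, document=None):
        """Append a dated change and, optionally, link the supporting document."""
        entry = self._entries[use_case_id]
        entry.history.append({"date": date_iso, "summary": summary})
        if document:
            entry.documents.append(document)

    def export_json(self):
        """Serialise the whole register so a third party can review it."""
        return json.dumps([asdict(u) for u in self._entries.values()], indent=2)


register = AIRegister()
register.add(UseCase("UC-01", "Draft customer correspondence", "medium", "Head of Customer Service"))
register.log_change("UC-01", "2024-05-02", "Updated system prompt after red-team test",
                    document="tests/uc-01-redteam.md")
print(register.export_json())
```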

10. Stakeholder Engagement

🤝 Engage communities with a focus on fairness, inclusion, and safety.

Ensure you:

- Consult people who may be impacted—especially marginalised groups.

- Understand stakeholder expectations, needs, and concerns.

- Take active steps to identify and reduce bias.

📌 Special Note on First Nations data: If using data about or from Indigenous communities, follow Indigenous Data Sovereignty Principles—including informed consent and the right to withdraw.

✅ How to Apply These Guardrails

- Start with Guardrail 1 to build foundations.

- Adopt all 10—they work together.

- Apply them at both the organisation level and AI system level.

- Use them as living practices—not a one-time checklist.

These guardrails are voluntary, but following them positions your organisation to meet future regulations and align with global best practices.

Why this matters

These guardrails and principles aren’t just about compliance. They’re about trust.

As AI becomes more embedded in business processes, our responsibility as solution architects and developers grows. Building copilots that solve business problems is table stakes—building ones that also align with ethical and societal values is leadership.

And while these standards are currently voluntary in Australia, they align with global frameworks such as ISO/IEC 42001 and the US NIST AI Risk Management Framework. This means starting now puts you ahead of the curve.

Final thoughts

If you’re building custom AI copilots and haven’t yet incorporated these frameworks—now’s the time. Whether you’re working with government, healthcare, or enterprise sectors, embedding responsible AI practices from day one is the key to long-term success.

Let’s build AI that’s not only smart—but safe, inclusive, and human-centred.
