Generative AI has captivated industries, redefined creativity, and accelerated innovation—but its journey didn’t begin with ChatGPT or DALL·E. Like all revolutions, it began with quiet, foundational work that grew in sophistication over decades.

Here’s a look at how generative AI evolved—from early logic-based systems to today’s creative collaborators.

🌱 1950s–1960s: Seeds of Possibility and Simulated Conversation

Long before the internet or smartphones, researchers began exploring how machines could “learn” from data and mimic human communication. One standout example: ELIZA, developed by Joseph Weizenbaum at MIT in the mid-1960s, which simulated conversation using basic pattern matching. It wasn’t truly intelligent, but it sparked a new way of thinking about human-machine interaction.

🔹 Key moments:

- Alan Turing proposes the Turing Test (1950)

- Early rule-based AI systems and symbolic logic

- ELIZA shows early promise for generative dialogue

These were the philosophical and technical seeds that hinted at what generative AI could become.
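To make "basic pattern matching" concrete, here is a minimal sketch of an ELIZA-style responder. The rules, the `respond` function, and the default reply are hypothetical and written for illustration; they are not taken from ELIZA's original script.

```python
import re

# Hypothetical rules for illustration only; not ELIZA's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(message: str) -> str:
    """Return the reply for the first rule whose pattern matches the message."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    # No rule matched: fall back to a generic prompt to keep the user talking.
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I need a holiday.", "I am tired", "The weather is nice"]:
        print(f"> {line}\n{respond(line)}")
```

Nothing here understands the input: the program reflects fragments of the user's own words back through fixed templates, which is why such a system can feel conversational without being intelligent.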

🧠 1980s–1990s: Neural Networks Begin to Form

With better hardware and more advanced programming, researchers returned to neural networks: algorithms inspired by the structure of the human brain. These early networks were small and expensive to train, but they marked a shift away from fixed rules toward pattern recognition.

🔹 What changed:

- Popularization of backpropagation for training multi-layer networks (1986)

- The first inklings of self-learning systems

- Growing emphasis on pattern detection and adaptive models

Generative AI moved from programmed rules to models that could “learn” structure within data.

🚀 2000s–2010s: Deep Learning and the Data Explosion

A breakthrough took hold from the mid-2000s into the early 2010s: deep learning, meaning neural networks with many layers trained on vast datasets. Coupled with improvements in GPUs, cloud computing, and internet-scale data, AI systems took a leap forward in both power and creativity.

🔹 Technologies fueling this leap:

- Convolutional Neural Networks (CNNs) for image understanding

- Recurrent Neural Networks (RNNs) for sequential data like language

- Rise of Variational Autoencoders (VAEs) to generate synthetic data

- Introduction of the Transformer architecture (2017)

For the first time, AI wasn’t just learning but generating: writing paragraphs, composing music, creating new faces.

🎨 2014–2015: GANs and the Creativity Boom

In 2014, Ian Goodfellow and colleagues introduced Generative Adversarial Networks (GANs), a game-changer. GANs paired two neural networks (a generator and a discriminator) in a creative tug-of-war to produce images, videos, and even 3D objects that felt strikingly human-made.

Just a year later, the idea behind diffusion models emerged: generate content by starting with noise and refining it step by step into a realistic image. The approach reached high-quality image generation several years later.

🔹 Major breakthroughs:

- GANs unlock ultra-realistic images, style transfer, deepfakes

- Diffusion models open up a new way of thinking about generation

- Generative AI expands across industries: from fashion to finance

AI was no longer just a tool; it became a co-creator.
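The generator-versus-discriminator tug-of-war can be made concrete with the two loss values each network tries to minimize. The sketch below is an illustration under assumptions, not a training loop: the function names are hypothetical, `d_real` and `d_fake` stand for the discriminator's estimated probability that a real or generated sample is real, and the generator uses the commonly used "non-saturating" loss.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real data near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -math.log(d_fake)


if __name__ == "__main__":
    # Hold the score on real data fixed and vary how convincing the fakes are.
    for d_fake in (0.1, 0.5, 0.9):
        d_loss = discriminator_loss(0.9, d_fake)
        g_loss = generator_loss(d_fake)
        print(f"D(fake)={d_fake:.1f}  discriminator loss={d_loss:.3f}  generator loss={g_loss:.3f}")
```

As the fakes become more convincing, the generator's loss falls while the discriminator's rises. Training alternates between the two networks, so each improvement on one side puts pressure on the other.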

📚 2020–2025: The Rise (and Race) of Foundation Models

The 2020s marked a major shift from narrow, task-specific models to foundation models that could reason, write, design, code, and even collaborate. OpenAI’s GPT-3 in 2020 kicked off a wave of language models trained on unprecedented volumes of data. By 2025, models had grown not just in size but in capability, offering multi-modal interaction across text, images, audio, and video.

🔹 Across the tech landscape, the LLM race accelerated:

- OpenAI evolved through GPT-4 and GPT-4.5

- Google’s Gemini series emphasized multi-modal intelligence

- Anthropic, Mistral, Cohere, and others entered the scene with unique architectures and safety models

- Enterprise platforms matured, allowing organizations to train or fine-tune their own secure, domain-specific models

These weren’t just tools for chatting—they became copilots, creative partners, and critical thinking aids across disciplines.

💡 2025 and Beyond: Everyday Magic

Generative AI has become deeply embedded in how we work, solve problems, and create. What was once experimental is now essential: integrated into design tools, business platforms, healthcare systems, and education.

🔍 Current real-world applications include:

- 🎨 Art & entertainment: Storyboarding, music composition, game development

- 🩺 Healthcare: AI-assisted diagnosis, drug discovery, personalized treatment plans

- 📈 Business & productivity: Document drafting, customer engagement, product design, strategic planning

- 👩‍💼 Workforce transformation: Copilots for developers, marketers, analysts, and educators

🔮 As we look ahead, the questions shift from “What can AI do?” to “What should we do with AI?” Ethics, governance, and creativity are now front and centre. As diffusion models, multi-modal LLMs, and enterprise AI platforms continue to evolve, we’re only beginning to explore what’s possible.

We’re not just using AI—we’re partnering with it.

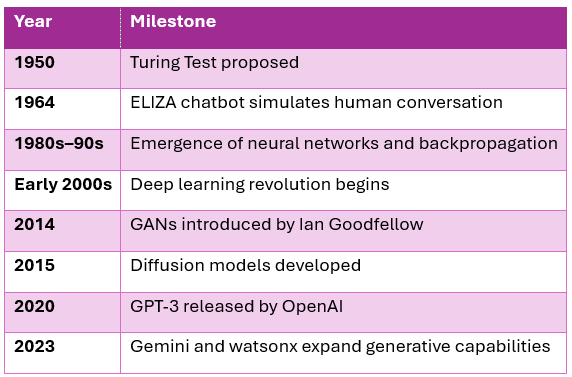

🔁 A Brief Timeline of Key Milestones

🌐 From Algorithms to Imagination

Generative AI’s journey spans over 70 years of theory, experimentation, and evolution. Each generation of innovation, from chatbots to diffusion models, built on the work of the last.

We’re no longer asking “Can machines think?” Now, we’re asking: “What can we imagine together?”

What part of this evolution excites you most? Are we nearing the peak, or just getting started?

👇 Let’s discuss in the comments.
